Using a Ranking Loss function, we can train a CNN to infer whether two face images belong to the same person or not. To use a Ranking Loss function, we first extract features from two (or three) input data points and get an embedded representation for each of them. Then, we define a metric function to measure the similarity between those representations, for instance the Euclidean distance.
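The two steps described here (embed each input, then compare the embeddings with a metric) can be sketched in a few lines. This is only an illustrative sketch: `embed` and its `weights` are hypothetical stand-ins for a real feature extractor such as a CNN, and `pairwise_ranking_loss` with its `margin` parameter is one common pairwise formulation that I am assuming for the example, not something defined in the text so far.

```python
import math

def embed(x, weights):
    """Hypothetical feature extractor: a plain linear projection standing in for a CNN."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def euclidean_distance(a, b):
    """Metric function comparing two embedded representations."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def pairwise_ranking_loss(distance, similar, margin=1.0):
    """Assumed pairwise formulation: similar pairs are penalised by their
    distance, dissimilar pairs only while they are closer than `margin`."""
    if similar:
        return distance
    return max(0.0, margin - distance)

# Toy projection from 3-D inputs to 2-D embeddings (keeps the first two coordinates).
weights = [[1.0, 0.0, 0.0],
           [0.0, 1.0, 0.0]]

face_a = [0.0, 0.0, 5.0]
face_b = [3.0, 4.0, 9.0]

d = euclidean_distance(embed(face_a, weights), embed(face_b, weights))

loss_if_same_person = pairwise_ranking_loss(d, similar=True)
loss_if_different_person = pairwise_ranking_loss(d, similar=False)
```

In this toy setup, labelling the pair as the same person yields a loss equal to the distance between the embeddings, so training would pull them together. Labelling it as different people yields zero loss, because the embeddings are already further apart than the margin.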

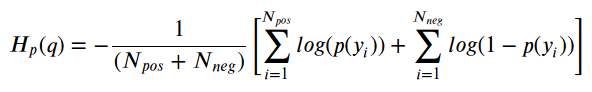

#Binary cross entropy loss verification

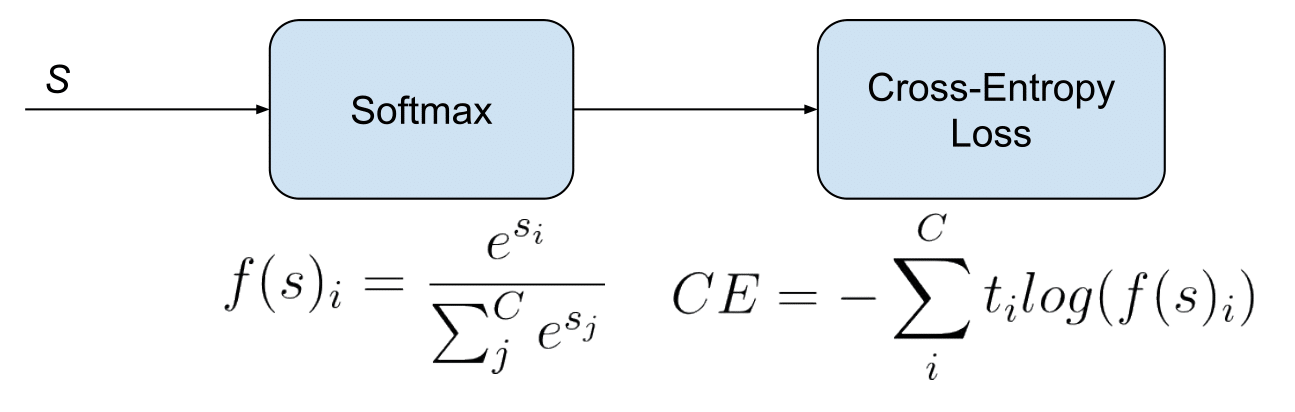

Understanding Ranking Loss, Contrastive Loss, Margin Loss, Triplet Loss, Hinge Loss and all those confusing names

After the success of my post "Understanding Categorical Cross-Entropy Loss, Binary Cross-Entropy Loss, Softmax Loss, Logistic Loss, Focal Loss and all those confusing names", and after checking that Triplet Loss outperforms Cross-Entropy Loss in my main research topic (Multi-Modal Retrieval), I decided to write a similar post explaining Ranking Loss functions.

Unlike other loss functions, such as Cross-Entropy Loss or Mean Square Error Loss, whose objective is to learn to directly predict a label, a value, or a set of values given an input, the objective of Ranking Losses is to predict relative distances between inputs. This task is often called metric learning.

Ranking Loss functions are very flexible in terms of training data: we just need a similarity score between data points to use them. That score can be binary (similar / dissimilar). As an example, imagine a face verification dataset, where we know which face images belong to the same person (similar) and which do not (dissimilar).

Ranking Losses are used in different areas, tasks and neural network setups (like Siamese Nets or Triplet Nets). That's why they receive different names such as Contrastive Loss, Margin Loss, Hinge Loss or Triplet Loss.
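To make "relative distances between inputs" concrete for the three-input (triplet) case, here is a small sketch. It assumes the widely used margin-based form of Triplet Loss, `max(0, margin + d(anchor, positive) - d(anchor, negative))`. The function name, the `margin` default and the toy 2-D embeddings are illustrative choices of mine, not taken from this post.

```python
import math

def euclidean_distance(a, b):
    """Distance between two embedded representations."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def triplet_ranking_loss(anchor, positive, negative, margin=1.0):
    """Assumed margin-based triplet formulation: the loss is zero once the
    negative is at least `margin` further from the anchor than the positive."""
    d_pos = euclidean_distance(anchor, positive)
    d_neg = euclidean_distance(anchor, negative)
    return max(0.0, margin + d_pos - d_neg)

# Toy embeddings (illustrative values only).
anchor = [0.0, 0.0]
positive = [0.0, 1.0]   # same identity as the anchor, distance 1
negative = [3.0, 4.0]   # different identity, distance 5

# Well-separated triplet: nothing left to learn from it.
easy = triplet_ranking_loss(anchor, positive, negative)

# Swapping the roles simulates a badly ordered triplet that would be penalised.
hard = triplet_ranking_loss(anchor, negative, positive)
```

Only the difference between the two distances matters here, not their absolute values, which is the sense in which the loss ranks inputs rather than predicting a label.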
